Digital Tools for Research

Digital tools can support you throughout your research journey, from initial planning to final presentation. Whether you lean toward paper-based methods or a hybrid approach that combines digital and traditional tools, this blog post from Kelly Trivedy offers insights to help you explore and experiment with new tools effectively!

From Social Science to Data Science

How can you use data science in social science research? Find an interview with the Oxford Internet Institute’s Dr. Bernie Hogan and lots of useful resources in this post.

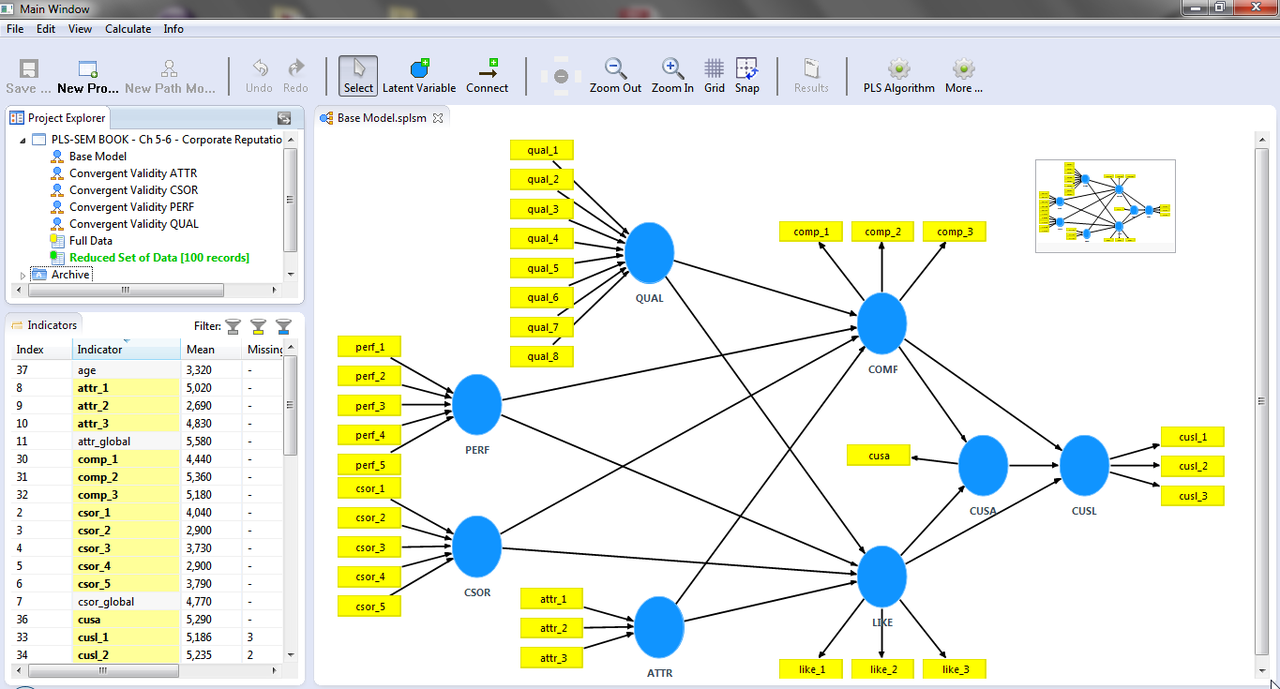

Partial Least Squares Structural Equation Modeling: An Emerging Tool in Research

Partial least squares structural equation modeling (PLS-SEM) enables researchers to specify and estimate complex models of cause-effect relationships.

Discovering ACEP: Use R for Content Analysis in Spanish

ACEP is an R package designed specifically for content analysis in Spanish. Learn about it and download the package.

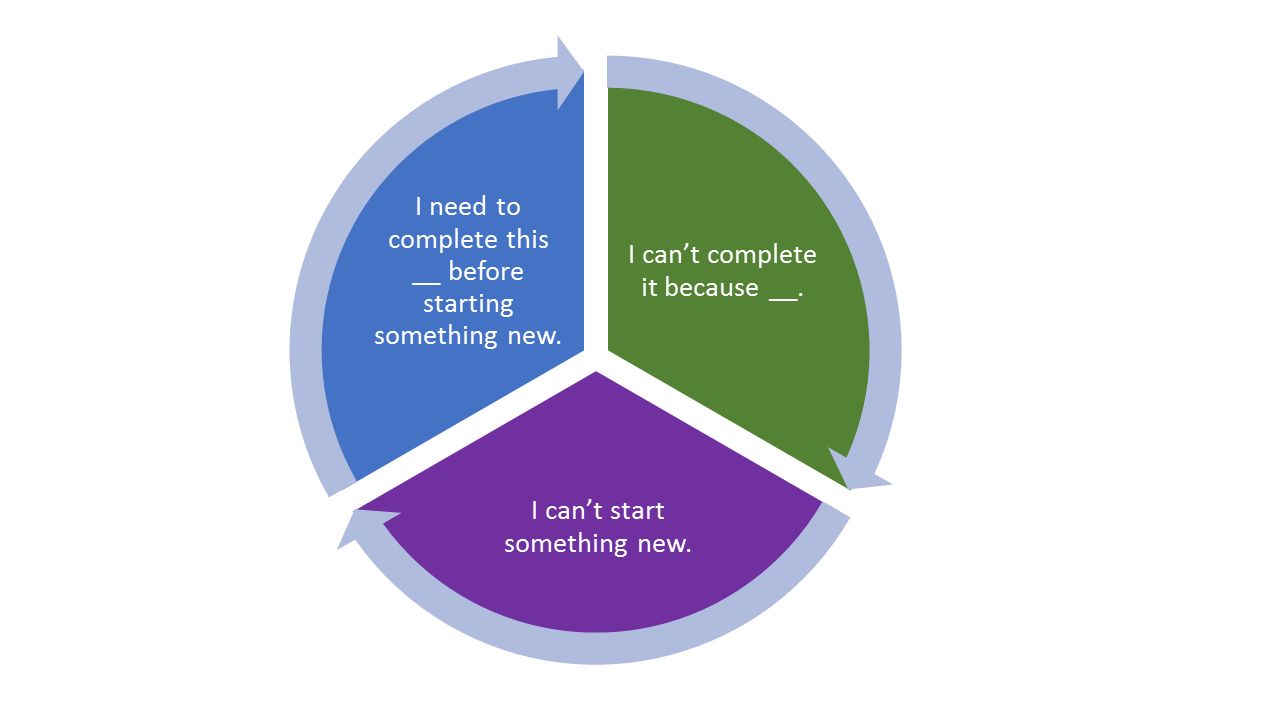

Revive an Old Writing Project, or Let It Die?

Look candidly at your unfinished project. Is it a stepping stone whose completion will allow you to move ahead? Or is it an obstacle that prevents you from moving forward? Find ideas to help you decide whether to revive that piece of writing or let it go.

Keeping Writing Projects Alive

Have a writing project that is languishing? Find practical tips for keeping it alive!

Digital Workflows: Special Issue Roundtable 2

Jessica Lester and Trena Paulus co-edited a December 2023 special issue for the Sage journal, Qualitative Inquiry, “Qualitative inquiry in the 20/20s: Exploring methodological consequences of digital research workflows.” Read the articles and watch a roundtable with contributors. This is the second of two discussions of the special issue.

Digital Workflows: Special Issue Roundtable 1

Jessica Lester and Trena Paulus co-edited a December 2023 special issue for the Sage journal, Qualitative Inquiry, “Qualitative inquiry in the 20/20s: Exploring methodological consequences of digital research workflows.” Read the articles and watch a roundtable with contributors. This is the first of two discussions of the special issue.

Organize Your Writing Projects

Celebrate Academic Writing Month 2023 by getting organized! Find open-access resources to help you avoid being distracted by details and lost files.

A Case for Teaching Methods

Find a 10-step process for using research cases to teach methods, with learning activities for individual students, teams, or small groups. (Or use the approach yourself!)

Become a Productive Academic Writer

Get ready for #AcWriMo! Find a checklist that will help you overcome obstacles that keep you from making progress with academic writing.

‘Technological reflexivity’ in qualitative research design

While reflexivity is a concept quite familiar to qualitative researchers, the idea of ‘technological reflexivity’ may be less so. Find information about October 2023 events and articles.

Sage Concept Grants: Shop Builder from Gorilla

The Shop Builder is a unique product that allows researchers to easily create a simulated and interactive online shop to study consumer behaviour.

Sage Concept Grants: Annotiva

Qualitative researchers face ongoing challenges in consistently identifying and addressing potential ambiguity in collaborative data annotation processes. Annotiva can help.

Sage Concept Grants: Swara

Swara is a web-based and mobile self-completion survey application that uses voice user interface technology.

Sage Concept Grants: GailBot

GailBot is an automated dialogue transcription tool that uses machine learning and heuristics to annotate essential paralinguistic details of talk such as overlaps, sound stretches, speed changes, and timed silences.

The five pitfalls of coding and labeling - and how to avoid them

Whether you call it ‘content analysis’, ‘textual data labeling’, ‘hand-coding’, or ‘tagging’, more and more researchers and data science teams are starting annotation projects. Learn how to avoid common pitfalls.

Starting out with qualitative analysis software

Learn the important first steps in using Quirkos software for qualitative data analysis.